Apache Kylin : Analytical Data Warehouse for Big Data

Page History

...

| Code Block | ||

|---|---|---|

| ||

kylin.engine.spark-conf.spark.executor.instances kylin.engine.spark-conf.spark.executor.cores kylin.engine.spark-conf.spark.executor.memory kylin.engine.spark-conf.spark.executor.memoryOverhead kylin.engine.spark-conf.spark.sql.shuffle.partitions kylin.engine.spark-conf.spark.driver.memory kylin.engine.spark-conf.spark.driver.memoryOverhead kylin.engine.spark-conf.spark.driver.cores |

Driver memory base is 1024M, it will adujst by the number of cuboids. The adjust strategy is define in KylinConfigBase.java

Cubing Step : Build by layer

- Reduced build steps

- From ten-twenty steps to only two steps

- Build Engine

- Simple and clear architecture

- Spark as the only build engine

- All builds are done via spark

- Adaptively adjust spark parameters

- Dictionary of dimensions no longer needed

- Supported measures

- Sum

- Count

- Min

- Max

- TopN

- CountDictinct(Bitmap, HyperLogLog)

Cubiod Storage

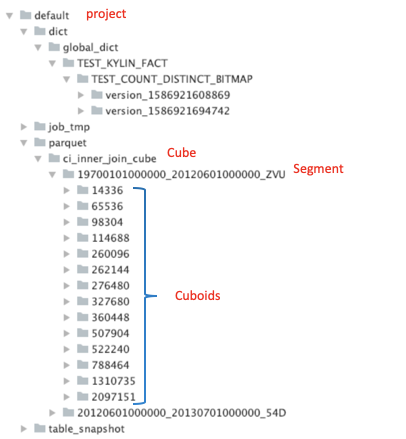

The flowing following is the tree of parquet storage dictory in FSdirectory in FileSystem. As we can see, cuboids are saved into path spliced by specified by Cube Name, Segment Name and Cuboid Id, which is processed by PathManager.java .

Parquet file schema

If there is a dimension combination of columns[id, name, price] and measures[COUNT, SUM], then a parquet file will be generated:

Columns[id, name, age] correspond to Dimension[2, 1, 0], measures[COUNT, SUM] correspond to [3, 4]

Part III . Reference

...